Control LLM with Guidance: Microsofts alternative to LangChain

by Reo Ogusu, Co-founder / CTO

What is Guidance?

Guidance is a new language to control Large Language Models, developed by Microsoft. It works with OpenAI LLMs, Hugging Face models, as well as self-hosted LLMs.

When it comes to promoting, Guidance provides a simpler, more intuitive, and rigour way to design conversations and control flow of how the language model processes the text.

Although LangChain offers quick and easy integrations to different applications, it is too encapsulated and allows no customization. Guidance is much less opinionated and offers flexibility, which we will touch upon later.

Install

GitHub — guidance-ai/guidance: A guidance language for controlling large language models.

A guidance language for controlling large language models. — guidance-ai/guidance github.com

GitHub -- guidance-ai/guidance: A guidance language for controlling large language models.

A guidance languagre for controling large language models. -- guidance-ai/guidance

https://github.com/

pip install guidance

Creating templates dynamically

Guidance uses Handlebars templating-like syntax, so if you have used Jinja2 with Flask or Go's HTML templating it should come naturally.

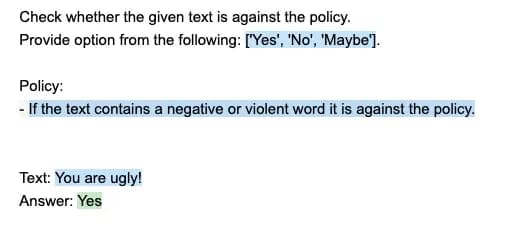

For example, we can make a profanity word detector as such.

import guidance

# choose a language model

guidance.llm = guidance.llms.OpenAI("text-davinci-003")

options = ["Yes", "No", "Maybe"]

policy_list = [

"If the text contains a negative or violent word it is against the policy.",

]

program = guidance('''

Check whether the given text is against the policy.

Provide option from the following: {{options}}.

Policy:

{{#each policy_list}}- {{this}}

{{/each}}

Text: {{sentence}}

Answer: {{select "answer" options=options}}

''')

The intuitive syntax makes the code self-explanatory. You can use variables inside and outside from {{VARIABLE}} like this. Basic text generation can be triggered with {{gen}} command. You can also have LLM select from a list of options using the {{select}} tag, which you can see is being used in the above sample. Iterables can be looped with {{#each ITERABLE}} {{/each}} . More tags are available to write complex prompts.

Running the above example gives the following output. Guidance colour codes the generated text so that it's easy to see which tokens are provided or generated.

Custom function and embeddings

You can also create and call any Python function. You can even pass generated variables as arguments.

import guidance

from qdrant import client

from utils import get_embedding

guidance.llm = guidance.llms.OpenAI("gpt-3.5-turbo")

embedding_model = "text-embedding-ada-002"

# defining custom function

def searh_db_for_related_excerpts_given(query) -> str:

"""

Search the database for the query

"""

q = get_embedding(query, embedding_model)

res = client.search(

collection_name="rulebook_collection",

query_vector=q,

limit=3

)

if len(res) == 0:

return "No results found"

return "---

".join([point.payload["text"] for point in res])

# set up guidance

sport_rule_assistant = guidance(

'''

{{#system~}}

You are a helpful and terse assitant to an athelete.

You are given a sports name, a question about the sport, and excerpts from the sport's rulebook.

Answer the question using the provided excerpts.

Show the player the excerpts used to answer the question.

If you are unable to answer the question, respond with "I don't know."

{{~/system}}

{{! Search the database for the excerpts. }}

{{#user~}}

Please answer the following question about {{sport_name}}:

{{query}}

This is the excerpts from the rulebook:

{{searh_db_for_related_excerpts_given query}}

{{~/user}}

{{#assistant~}}

{{gen 'answer' max_tokens=500}}

{{~/assistant}}

'''

)

get_embedding; creates an embedding from an input query and searh_db_for_related_excerpts_given runs a similarity search using the query through a vector database.

I am using Qdrant for the vector database. It is open source and has a nice Python client. Popular choice such as Pinecone tends to be on the expensive side so I highly recommend checking this out.

GitHub — qdrant/qdrant: Qdrant — High-performance, massive-scale Vector Database for the next...

Qdrant — High-performance, massive-scale Vector Database for the next generation of AI. Also available in the cloud...

https://github.com/

Conclusion

I like how the whole template reads like a natural text while still keeping the structure and rigour. This is my personal favourite language for prompt templating as it has a great developer experience.

I think Microsoft sees the future where we write prompts with complex logic (or are they doing it already?). Guidance gives developers the power to control LLMs with the perfect balance between flexibility and rigour.

Will Guidance become the React of LLM world? I think it is the strongest contender yet.

There is more to dive into with Guidance so I will cover more in the next blog!

P.S.

At Seeai, we help LLM-powered software development. For any question contact us here